Dev log Week 01: Testing development environments

Welcome to the first developer log of our project!

This week we mainly focused on choosing the development platform and the general art style of our game.

We researched workflows in Unreal and Unity to see which engine would best suit our game, our skills and our time frame. We looked at both engines from the perspective of a programmer and from that of an artist/technical artist.

Technical art

On the artists' side, we wanted to compare workflows between Unreal and Unity, mainly for particle FX, post processing, shaders and materials.

- Particle FX

- Unreal

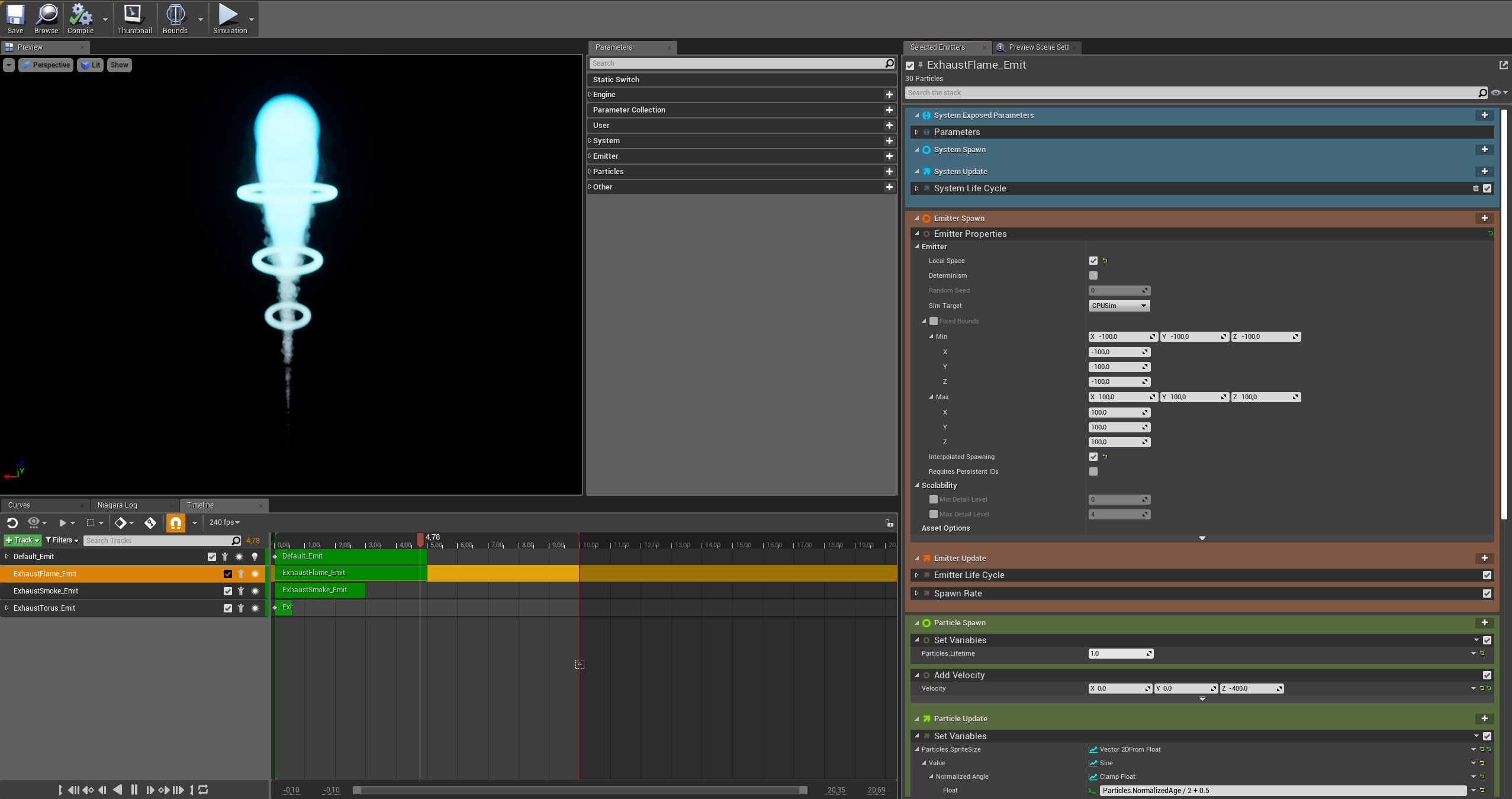

- Particle FX in Unreal are made with the Niagara system.

- Pros

- It's the workflow the artists are most experienced with

- The Houdini Niagara plugin lets you customize particle effects beyond the default options with node-based programming

- Cons

- Choosing Unreal can limit interactions between the player and FX because of the added difficulty for the programmers


- Unity

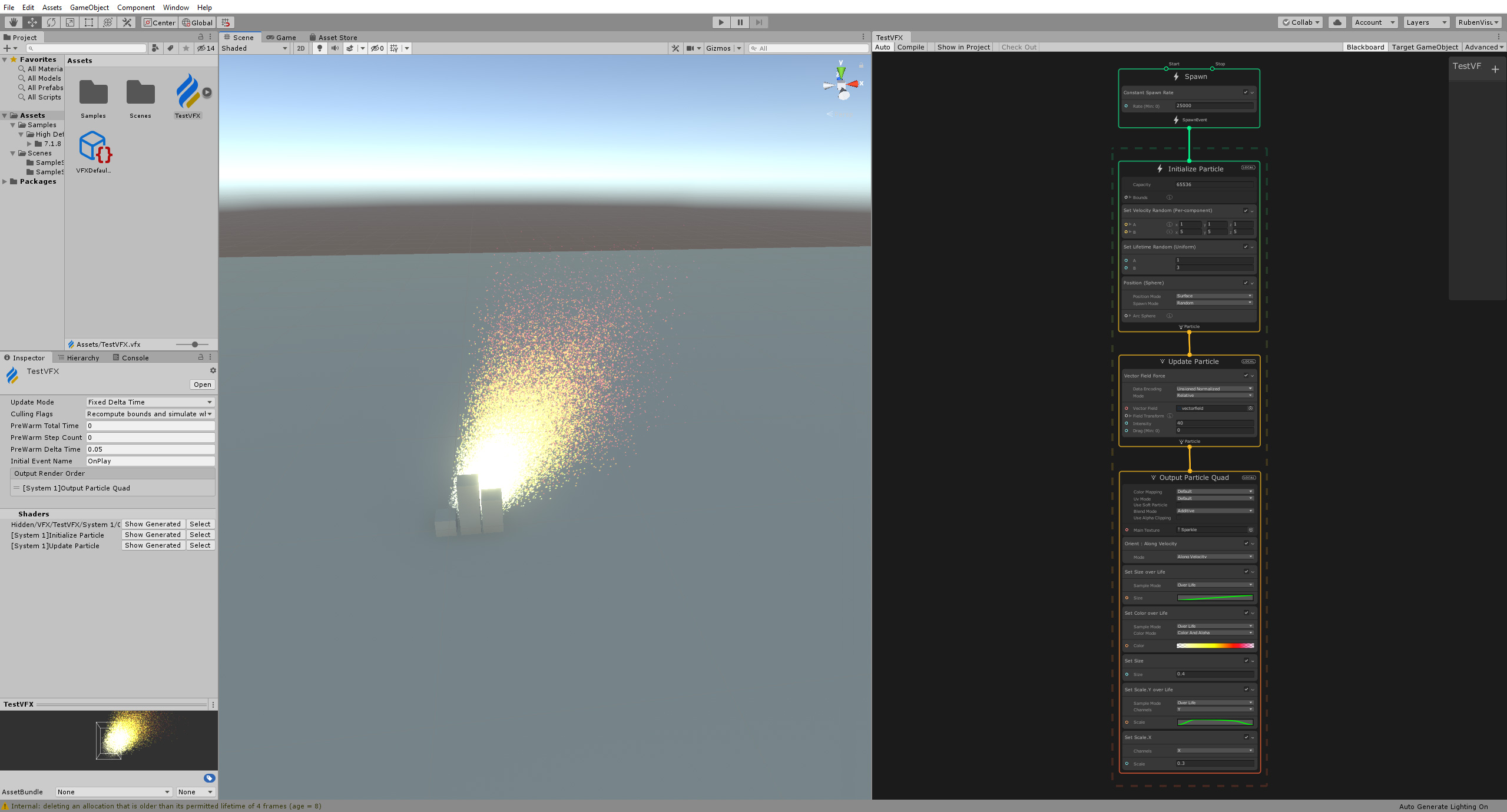

- Particle FX in Unity can be made with the built-in particle system or with the newer Visual Effect Graph

- Pros

- The built-in particle system shares some similarities with Unreal's Niagara system

- The Visual Effect Graph runs on the GPU and allows spawning millions of particles without a serious impact on performance

- Cons

- The built-in particle system is aging, while Unity is pushing the Visual Effect Graph more and more, even though it would probably not be the best choice for our project

- The Visual Effect Graph uses a radically different workflow compared to Niagara


- Shaders and materials

- Unreal

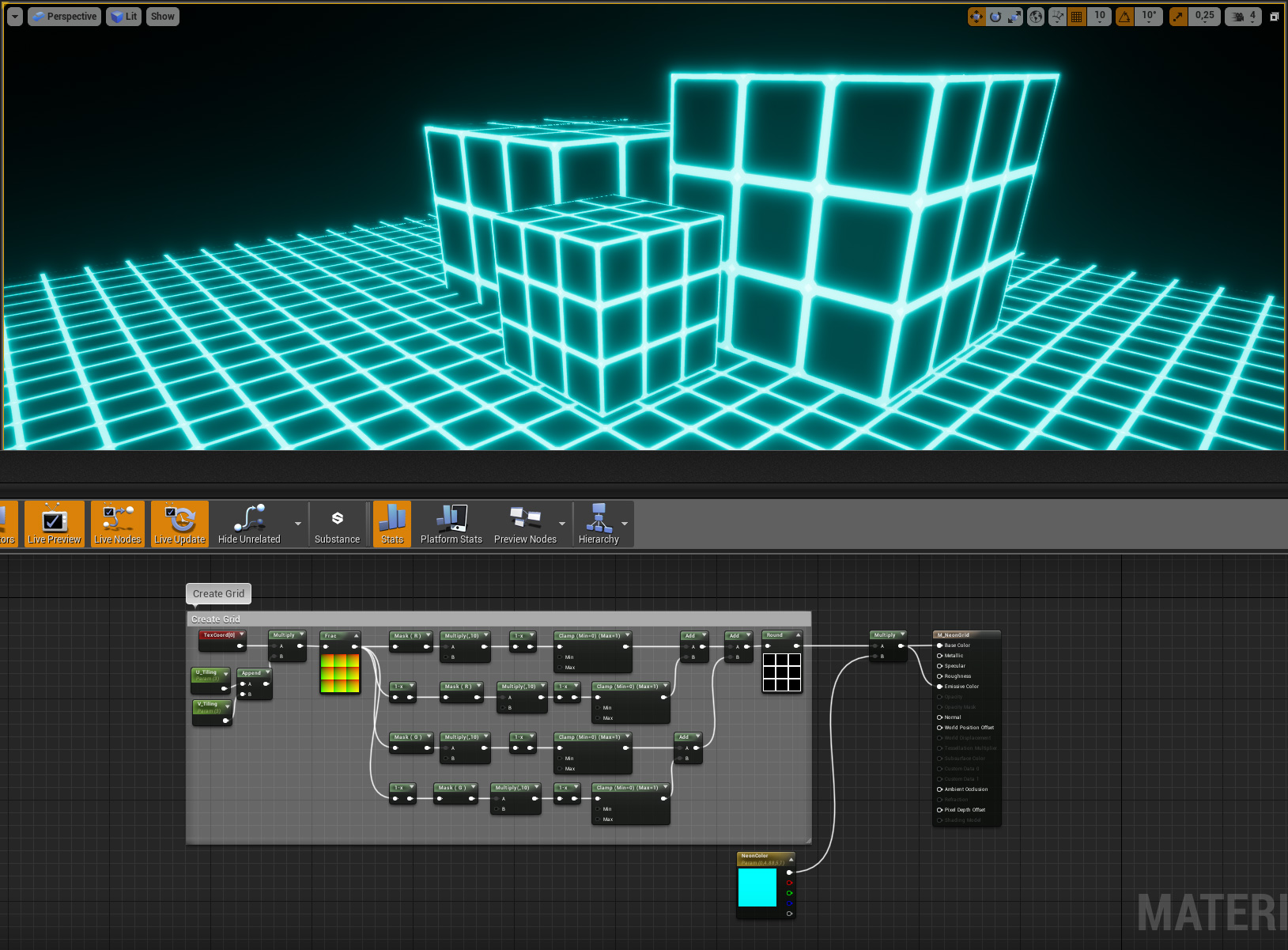

- Shaders are made with node-based programming. Material instances are made from these shaders and applied to objects.

- Pros

- It's the workflow the artists are most experienced with

- Because we have spent a lot of time in this workflow in the past, we have amassed a library of ready-to-go shaders


- Unity

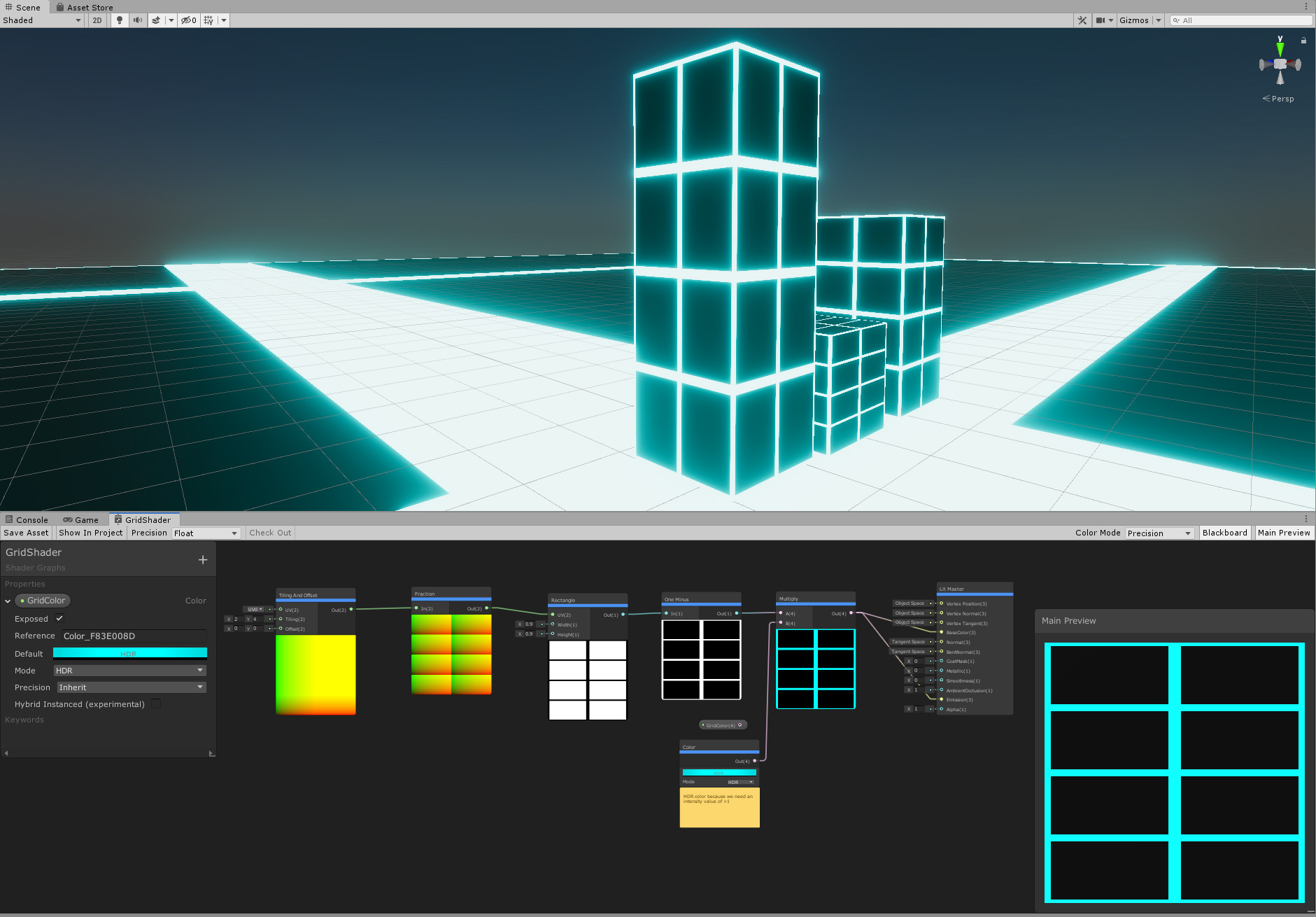

- Shaders are made with Shader Graph. How materials are created depends on the render pipeline chosen at the start of development

- Pros

- The workflow is almost identical to the Unreal workflow and sometimes even has quality-of-life (QOL) nodes that Unreal lacks

- Creating parameters from variables is very intuitive

- Cons

- Though Shader Graph is nice for making simple materials, it feels less flexible than Unreal's node-based shader system

- Shader Graph is relatively new, so documentation and tutorials are harder to find, which led to some frustrating moments, especially in combination with the High Definition Render Pipeline (HDRP)

- Shader Graph doesn't seem bug-free: we ran into some bugs when making parameters out of color nodes


- Post processing

- Unreal

- Post processing in Unreal is handled by post process volumes, which you place in your level

- Pros

- Once again, it's the workflow the artists are most familiar with

- Because post processing is limited to volumes that you physically place in your level, the workflow is very intuitive: you can see in the editor which area will be affected

- All of your post processing settings are found in one spot, the post process volume itself

- Lots of built-in options for post processing

- Cons

- Having all the options in your post process volume visible at all times can be a bit overwhelming


- Unity

- Post processing in Unity depends on the render pipeline. By default, it is handled by a post processing volume and a post processing layer attached to the camera.

- Pros

- Option to control post processing on a per-object basis with the layer system

- When using the High Definition Render Pipeline, the workflow is very similar to the workflow in Unreal

- You only add the effects you need to your volume, which keeps it clean

- Cons

- Because the different render pipelines use different post processing workflows, looking up information gets really confusing, as sources don't always mention which pipeline they use

- Post processing effects seem limited compared to those in Unreal

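The parent/instance relationship described under Shaders and materials (Unreal material instances made from a shader, Unity materials made from a Shader Graph) can be sketched as a parent that owns default parameter values and instances that store only the values they override. This is a simplified, engine-agnostic C++ model under our own assumptions: `Material`, `MaterialInstance`, `set` and `get` are hypothetical names, not the Unreal or Unity API, and only scalar parameters are modelled.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical, simplified model (not the Unreal or Unity API): the parent
// material exposes parameters with default values.
struct Material {
    std::string name;
    std::map<std::string, float> defaults;  // parameter name -> default value
};

// An instance points at its parent and stores only the values it overrides.
struct MaterialInstance {
    const Material* parent;
    std::map<std::string, float> overrides;

    explicit MaterialInstance(const Material* p) : parent(p) {
        assert(parent != nullptr && "an instance always needs a parent material");
    }

    // Override a parameter the parent exposes. Unknown names are rejected.
    bool set(const std::string& param, float value) {
        if (parent->defaults.count(param) == 0) return false;
        overrides[param] = value;
        return true;
    }

    // The instance's override wins; otherwise fall back to the parent's default.
    // Throws std::out_of_range for a parameter the parent doesn't expose.
    float get(const std::string& param) const {
        auto it = overrides.find(param);
        if (it != overrides.end()) return it->second;
        return parent->defaults.at(param);
    }
};
```

In this model, changing a default on the parent immediately changes every instance that hasn't overridden that parameter.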

Art

For the art style, we decided to work separately during the first week of prototyping to come up with different ideas. Each of us artists made a mood board with different ideas and concepts. We each chose one of our ideas or concepts and expanded on it. We worked out first drafts of one art bible per artist, showing the moods, ideas, environments and player characters we would like to see with our current game mechanics.

In the next meeting we will decide which theme best fits our game mechanics and target audience. Depending on the category, we might also combine different ideas or apply concepts from one art bible to another.

We still need to finish a single art bible document that fits, but by exploring different art style paths we created enough variety to make sure we don’t get stuck with our first idea and instead create something good.

Programming

On the programmers' side, we wanted to see which engine would best fit our game. Both of our programmers had experience with Unity, but neither had experience with Unreal Engine C++. One programmer focused on Unity, and the other on Unreal Engine.

- Unreal Engine

- Prototypes:

- I created a prototype to test input & actor movement.

- After the single-player input prototype, I also created a prototype for multiple players.

- The most difficult part of this prototype was finding out how physical controllers interact with the Unreal Engine Player Controller. At first I assumed that you could send input from one physical keyboard to two Player Controllers (using the Player Controller ID as a unique identifier, so one Player Controller could use WASD and the other the arrow keys), but this wasn't the case. I then rewrote everything and tried it with two physical controllers, and I was finally able to move each player with the thumbstick on its corresponding controller.

- Right now I'm expanding the previous (multiplayer) prototype so the camera adjusts to where all the players are in the world. I immediately ran into a problem (which isn't solved yet): getting all the actors of a specific class in Unreal Engine C++ is more difficult than I thought. I found a simple function in the documentation that does this, but I haven't figured out how to use it correctly yet. Right now, the game sometimes crashes when the function executes.

- Difficulties:

- While I was working on the prototypes, the Unreal Engine forum and answer pages were offline, which made finding solutions to problems a lot more difficult. While writing this devlog I noticed that the pages are back online, so that'll make learning Unreal Engine C++ a lot easier.
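The final setup, where each player is moved by the thumbstick on its own physical controller, can be thought of as routing every input event to the player that owns the device it came from. The sketch below shows that idea in plain, engine-agnostic C++ under our own assumptions; it is not how Unreal implements input internally, and `InputEvent`, `InputRouter`, `bind` and `dispatch` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical, engine-agnostic sketch (not Unreal's API): every input event
// carries the ID of the physical device that produced it.
struct InputEvent {
    int deviceId;  // which physical controller sent the event
    float stickX;  // thumbstick axes, expected in [-1, 1]
    float stickY;
};

struct Player {
    float x = 0.0f;
    float y = 0.0f;
};

class InputRouter {
public:
    // Give a physical device to a player slot (an index into the players vector).
    void bind(int deviceId, std::size_t playerIndex) { owners_[deviceId] = playerIndex; }

    // Move only the player that owns the event's device by stick * speed * dt.
    // Returns false (and moves nobody) for unbound devices or invalid slots.
    bool dispatch(const InputEvent& e, std::vector<Player>& players,
                  float speed, float dt) const {
        assert(dt >= 0.0f && "frame time must not be negative");
        auto it = owners_.find(e.deviceId);
        if (it == owners_.end() || it->second >= players.size()) return false;
        Player& p = players[it->second];
        p.x += e.stickX * speed * dt;
        p.y += e.stickY * speed * dt;
        return true;
    }

private:
    std::map<int, std::size_t> owners_;  // device ID -> player slot
};
```

Events from a device that isn't bound to any player are dropped, so an extra controller can't move someone else's character.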

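For the camera that adjusts to all players, one common approach is to take the bounding box of every player position, aim at its center and pull the camera back in proportion to its size. The sketch below is plain C++ under our own assumptions (2D positions, a linear distance rule); `PlayerActor`, `frameAllPlayers`, `minDistance` and `distancePerUnit` are hypothetical names. In Unreal the list of actors would come from an engine lookup such as `UGameplayStatics::GetAllActorsOfClass`, though we can't say whether that is the function mentioned above or why it crashed. The sketch skips null entries and handles an empty list explicitly, since dereferencing invalid pointers or assuming at least one result are frequent causes of crashes in this kind of code.

```cpp
#include <algorithm>
#include <cassert>
#include <optional>
#include <vector>

struct Vec2 {
    float x;
    float y;
};

// Hypothetical stand-in for a player actor; only its position matters here.
struct PlayerActor {
    Vec2 position;
};

struct CameraFrame {
    Vec2 center;     // point the camera should look at
    float distance;  // how far back the camera should sit
};

// Frame every valid player: aim at the center of their bounding box and pull
// back in proportion to the box's largest side, never closer than minDistance.
// Null entries are skipped; if no valid player is left, std::nullopt is returned.
std::optional<CameraFrame> frameAllPlayers(const std::vector<const PlayerActor*>& actors,
                                           float minDistance, float distancePerUnit) {
    assert(minDistance >= 0.0f && distancePerUnit >= 0.0f);
    bool found = false;
    Vec2 lo{0.0f, 0.0f};
    Vec2 hi{0.0f, 0.0f};
    for (const PlayerActor* actor : actors) {
        if (actor == nullptr) continue;  // never dereference a null entry
        const Vec2 p = actor->position;
        if (!found) {
            lo = p;
            hi = p;
            found = true;
            continue;
        }
        lo.x = std::min(lo.x, p.x);
        lo.y = std::min(lo.y, p.y);
        hi.x = std::max(hi.x, p.x);
        hi.y = std::max(hi.y, p.y);
    }
    if (!found) return std::nullopt;  // nothing to frame
    const Vec2 center{(lo.x + hi.x) * 0.5f, (lo.y + hi.y) * 0.5f};
    const float extent = std::max(hi.x - lo.x, hi.y - lo.y);
    return CameraFrame{center, std::max(minDistance, extent * distancePerUnit)};
}
```

Returning `std::nullopt` forces the caller to decide what the camera does when no players are found, instead of silently using a meaningless position.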


Boomers

| Status | On hold |
|---|---|
| Authors | JonasBriers, Steff Feyens, ElineMelis, HannahGeilen, RubenVO |
